Getting cold emailing right takes some experimentation and learning by trial and error: trying out different subject lines or various versions of email copy to see what’s working and what’s not. And there’s no better way to find that out than by A/B testing.

Today I have the pleasure of introducing you to A/B tests in Woodpecker, a new feature we’ve just rolled out. First, let’s have a closer look at what elements of your cold email you should test to improve your open and reply rates. Then I’ll show you how to carry out an A/B test step by step, based on an example.

Here we go.

What is A/B testing about?

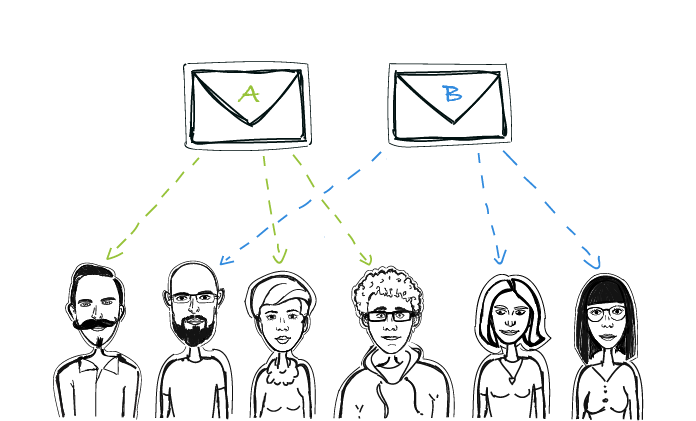

If you’re new to sales and marketing, you might not have heard about A/B tests (or split tests, as they are also called). So let me give you a brief overview of the main idea behind them.

To carry out an A/B test, divide your prospect base into at least two comparable subgroups. You can also make it three or four subgroups, depending on how many versions you want to test. Each group should consist of the same number of prospects who share similar characteristics, for example, all of them are CEOs of rising startups.
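If you ever need to prepare such groups by hand, the split can be sketched in a few lines of Python. This is only an illustration: the `split_into_groups` helper and the example addresses are made up for this post, and it assumes your list already contains one homogeneous segment.

```python
import random

def split_into_groups(prospects, n_groups=2, seed=None):
    """Randomly split prospects into n_groups groups of (near-)equal size."""
    if n_groups < 2:
        raise ValueError("an A/B test needs at least two groups")
    shuffled = list(prospects)
    # Shuffle first so that list order (e.g. signup date) doesn't bias a group.
    random.Random(seed).shuffle(shuffled)
    # Deal prospects out like cards: group sizes differ by at most one.
    return [shuffled[i::n_groups] for i in range(n_groups)]

# Hypothetical prospect list: 200 contacts from one segment.
prospects = [f"ceo{i}@example.com" for i in range(200)]
group_a, group_b = split_into_groups(prospects, n_groups=2, seed=42)
print(len(group_a), len(group_b))  # 100 100
```

Shuffling before splitting is the important part: it keeps any hidden ordering in your list from ending up concentrated in one group.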

Another important principle of A/B testing is to change one thing at a time. The reason for that is simple: only this way will you find out what made the difference. So, for example, experiment only with the subject line and leave the rest of the email intact. Or tweak only the value proposition without touching anything else.

Here’s more about what A/B testing should look like:

A/B Testing in Cold Email: How to Optimize Our Copy to Get More Replies?

What elements of my cold email campaign should I A/B test?

The answer depends on what result you want to achieve: whether your goal is to improve the open rate or have more prospects respond positively to your message.

I want to increase my open rate

If you’d like more recipients to open your email, then you should start by testing different subject lines. The subject line is what makes people decide whether your email is worth their time or not. Sometimes adding just a small tweak, like personalizing it, turns out to be a game-changer.

Another part that catches your addressee’s eye when they browse through their mailbox is your email’s intro. Most email clients display the first sentence of the message right under the subject line. It gives recipients a sneak peek of what they’ll find inside.

I want to get more positive replies

Your prospects open emails from you, but you don’t hear back from them? Perhaps the content of your email didn’t strike the right chord. Maybe the subject line caught your prospects’ attention, but then your email was all about you and your company. Or the value proposition was too generic and didn’t appeal to this particular prospect segment. Or there was no clear CTA, so your prospects didn’t really know what you wanted from them.

The only way to find out what stops people from replying is to test different versions of your email copy and compare the results to spot the winner.

Here’s more about what to A/B test if your open, reply or interest rate could use a boost:

My Open, Reply or Interest Cold Email Metrics are Low, What Can I A/B Test? >>

Also, check:

Cold Email Benchmarks by Campaign Size and Industry: What Makes Cold Emails Effective in 2020? >>

Now that you’ve determined what to focus on in your A/B tests, let’s move to the practical part.

How to carry out an A/B test in Woodpecker?

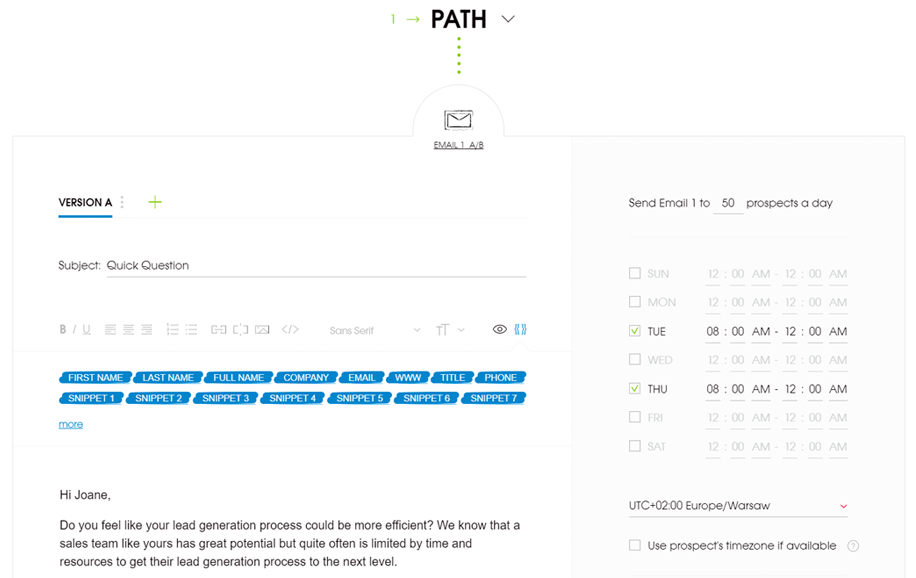

So let’s assume that it’s the subject line you want to A/B test. Your hypothesis is that the original one – “Quick question” – caused the below-average open rate of your last campaign. You’ve decided to test a different approach and try a subject line that relates more to your prospect’s needs, something like “Is your lead gen process efficient?”. Let’s see how you can carry out an A/B test in Woodpecker to find out which version is more effective.

First, log in to your Woodpecker account and create a new campaign. If you don’t have an account yet, sign up for a free 14-day trial.

Step #1 Create different email versions

When you open a new campaign, you start with Version A of your first email, which is your original message. You can add more versions of your email in two ways: either by clicking the green + symbol or by copying Version A, like in the example below:

So now your opening email comes in two versions: A and B, which differ only in the subject line.

If you wish, you can add up to five email versions. In this example, we’ll stick to two.

There’s probably one question that has just popped into your head now…

How will Woodpecker handle the sending?

Your emails will be sent in random order. Woodpecker will shuffle between all the versions, so as to keep a steady sending volume and keep your deliverability rate high.

Mind you: To get meaningful results, we recommend sending each email version to at least 100 prospects. So before you start, make sure your domain and email address are properly warmed up and you have a good sender reputation.

Step #2 Add follow-ups

Next, add two or three follow-ups to the sequence. Your prospects are busy people. They may not have time to reply to you right away, or they may simply forget to do it. Sometimes they also need a bit more “nurturing” to see the benefits of your product for themselves. That’s why it’s so important to always send follow-ups.

If you’d like to A/B test different versions of a follow-up, you can do that as well. The idea remains the same. You can create up to five different versions of a follow-up email in each Path. (If-campaigns let you split your campaign into two sequences, called Paths, depending on whether a prospect opened your email or clicked a link in the copy.)

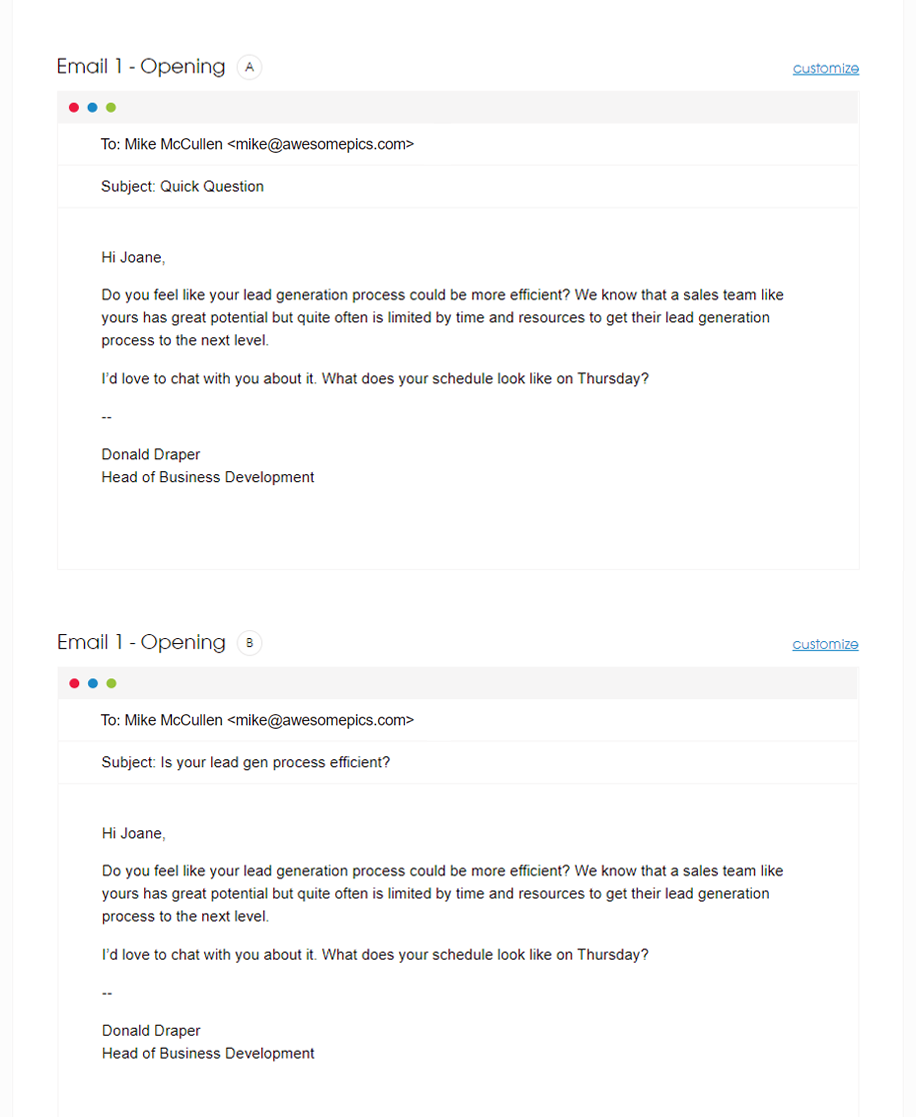

Step #3 Preview the campaign

Before you click send, check what each version of your email and follow-up will look like in a prospect’s inbox.

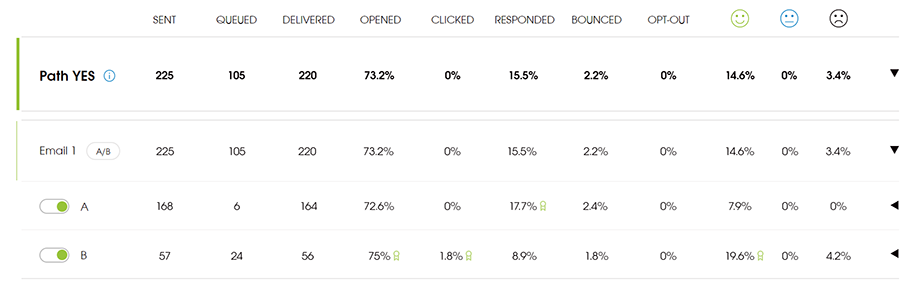

Step #4 Compare the results

Once your campaign is running, it’s time for monitoring and analyzing the results. Open your stats dashboard and compare each version’s performance. The best rates will be marked by a special winner icon.

If, in the meantime, you notice that one email version performs significantly worse, you can turn it off and Woodpecker will stop sending it immediately.
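When the gap between two versions is small, it’s worth checking whether it could simply be down to chance before you pick a winner or switch a version off. Here’s a rough sketch of one common way to do that, a two-proportion z-test, in plain Python. It is not part of Woodpecker, the `two_proportion_z_test` helper is made up for this post, and the open counts are invented for illustration.

```python
import math

def two_proportion_z_test(opens_a, sent_a, opens_b, sent_b):
    """Return (z, two-sided p-value) for the difference in open rates B - A."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    # Pooled open rate under the assumption that both versions perform the same.
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if se == 0:
        raise ValueError("open rates are all 0% or all 100%; nothing to compare")
    z = (rate_b - rate_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Invented example: version A opened by 35 of 100 prospects, version B by 52 of 100.
z, p = two_proportion_z_test(opens_a=35, sent_a=100, opens_b=52, sent_b=100)
print(f"z = {z:.2f}, p = {p:.3f}")
```

As a rule of thumb, a p-value below 0.05 suggests the difference is unlikely to be pure luck. With only a few dozen sends per version, even a large gap in open rates may not pass this check, which is one more reason to send each version to enough prospects.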

Start testing today

That’s the end of your first A/B test, but it shouldn’t be the end of testing. Keep experimenting with various parts of your email copy on a regular basis until you’re satisfied with the results. Additionally, by sending more diversified emails, you’ll also improve your deliverability. That’s quite a desirable side effect, isn’t it?

In case you have more questions, read our A/B testing FAQ, which will hopefully clear up all your doubts.
