About two months ago, Vovik, our Head of Inbound Sales, hosted a live Q&A to share his knowledge of A/B testing in email.

We were surprised at how many questions you guys asked! It looks like the topic of A/B tests in email needs to be explained in more detail, so I teamed up with Vovik to provide even more A’s to your Q’s. Check them out below.

If you’d like to see a quick recap of the webinar, Vovik recorded a 15-minute summary. Watch it here:

Email A/B Testing in Practice>>

And now to the Q&A, ladies and gentlemen.

1. What is A/B testing in email?

In email, A/B testing means creating alternate versions of a single element of an email, e.g. its subject line or the CTA in the email body, and sending each version to one of two groups of prospects (equal in size and with similar characteristics) to see which version performs better.

2. What can I A/B test in email?

In general, you can test various elements of the email itself, such as the subject line, value proposition or CTA, as well as the delivery time.

3. Why do A/B tests help with email deliverability?

Because they let you create more diversified email content within a campaign.

As you may know, sending huge numbers of emails with exactly the same copy might alarm spam filters. So anything that helps you diversify the content of your email campaign works in favor of good deliverability.

And although it’s not the primary goal of A/B testing, it is indeed a very nice side effect.

4. How long should I run an A/B test?

It’s up to you. You can contact a couple hundred prospects within a few days and then decide which version is better. Given email providers’ sending limits and spam filters, it’s better to spread the sending across 3-4 days; this way you reduce the risk of harming your domain reputation and deliverability.
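If you plan the schedule yourself instead of relying on a tool’s built-in sending limits, the sketch below shows one way to spread a test over several days. It splits the prospect list into daily batches of nearly equal size. The function name `spread_sends` and the even daily split are our own assumptions for illustration. This is not how Woodpecker schedules emails.

```python
from datetime import date, timedelta


def spread_sends(prospects, start, days):
    """Split a list of prospects into `days` daily batches whose sizes differ by at most one.

    Hypothetical helper for illustration; returns {send_date: [prospects]}.
    """
    if days < 1:
        raise ValueError("days must be at least 1")
    base, extra = divmod(len(prospects), days)
    schedule = {}
    index = 0
    for day in range(days):
        # The first `extra` days each take one additional prospect.
        size = base + (1 if day < extra else 0)
        schedule[start + timedelta(days=day)] = prospects[index:index + size]
        index += size
    return schedule
```

For example, calling `spread_sends(prospects, date.today(), 4)` on a list of 200 prospects gives four daily batches of 50.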

5. How long do I need to wait before I turn off version B if it doesn’t perform as well as version A?

You should contact at least 100 prospects with version A and 100 with version B to determine which one really is the winner. The more prospects you contact, the more accurate your results will be.
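The 100-per-version figure is a rule of thumb, and the answer above doesn’t name a statistical test. If you want to check whether a gap between two versions is more than noise, a two-proportion z-test is one common option. We are swapping that technique in here; it is not part of the original advice. The function name is hypothetical, and the sketch uses only Python’s standard library.

```python
import math


def two_proportion_p_value(replies_a, sent_a, replies_b, sent_b):
    """Two-sided p-value of a pooled two-proportion z-test comparing reply rates."""
    rate_a = replies_a / sent_a
    rate_b = replies_b / sent_b
    # Pooled rate under the null hypothesis that both versions perform the same.
    pooled = (replies_a + replies_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        # Both versions got 0% (or 100%) replies, so there is no difference to test.
        return 1.0
    z = (rate_a - rate_b) / std_err
    # For a standard normal Z, P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))
```

With 100 prospects per version, a 10% vs 5% reply rate (`two_proportion_p_value(10, 100, 5, 100)`) gives a p-value of about 0.18, so the gap could easily be chance. The same rates with 1,000 prospects per version give a p-value far below 0.05. That is the "the more, the better" point in numbers.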

6. How often should I run A/B testing?

It’s up to you. If your campaigns don’t perform as expected, it’s definitely time to run some A/B tests and check how your email content influences your open, click and response rates.

Experimenting is generally a good idea: it helps you learn what works best with your specific audience.

7. When would it help to use a version C in addition to versions A and B?

When you have several ideas and all of them seem good, that’s a good reason to add a version C to check which one works best. Heck, you might even create versions A, B, C, D and E if you’d like.

Remember, though, to test only one element at a time: vary only the subject line, only the signature, or only the email body. Otherwise you won’t be able to tell which element really made the difference.
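If you keep your versions as structured data, for example when drafting them in a script or spreadsheet export, you can check the "one element at a time" rule automatically. In this sketch each version is a plain dictionary of email elements. The field names, sample copy and the `changed_elements` helper are all hypothetical.

```python
def changed_elements(version_a, version_b):
    """Return the sorted names of email elements whose content differs between two versions."""
    keys = version_a.keys() | version_b.keys()
    return sorted(key for key in keys if version_a.get(key) != version_b.get(key))


# Hypothetical versions: B copies A and changes only the subject line.
version_a = {
    "subject": "Quick question about your onboarding",
    "body": "Hi there, I noticed your team is hiring...",
    "signature": "Jane",
}
version_b = dict(version_a, subject="An idea for your onboarding")

changed = changed_elements(version_a, version_b)
# Fails loudly if more than one element (or none) was changed.
assert changed == ["subject"], f"test one element at a time; changed: {changed}"
```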

8. Which version should I stick to in this case?

A and B differ only in their subject lines.

A has a 2.5% higher response rate but a lower open rate.

B has a 3% higher open rate but a lower response rate.

Which version should I stick to?

I’d go with version B, the one with the higher open rate. Why?

Let’s say you’re sending an email that doesn’t need a reply: you’d rather people click the link and sign up for your webinar. In that case, you pick the version with more opens, which is B.

In a different scenario, you might be sending typical cold sales emails. Your goal is to get people to reply so you can start a conversation with them. In this case, I’d also stick with version B: the email body is the same in both versions, so sooner or later B should catch up with A on response rate.

Alternatively, you could keep both versions running and see how the rates change over time.

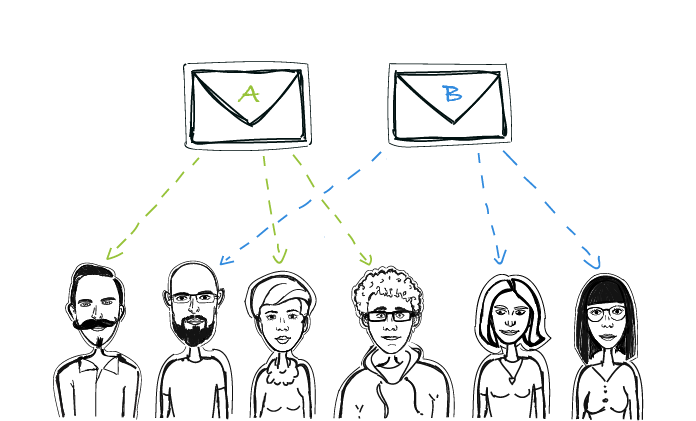
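To put this trade-off into numbers, you can turn rates into expected replies. This sketch assumes that "response rate" means replies per opened email. The rates below are made up and are not the figures from the question. Because the two bodies are identical, the sketch also assumes both versions turn opens into replies equally well. The function name is hypothetical.

```python
def expected_replies(sent, open_rate, reply_rate_per_open):
    """Expected number of replies when the response rate is measured per opened email."""
    return sent * open_rate * reply_rate_per_open


# Hypothetical rates for illustration only: B opens better, and because the
# email body is identical, we assume both versions convert opens equally well.
replies_a = expected_replies(1_000, open_rate=0.40, reply_rate_per_open=0.10)
replies_b = expected_replies(1_000, open_rate=0.43, reply_rate_per_open=0.10)
print(f"A: {replies_a:.0f} replies, B: {replies_b:.0f} replies")
```

Under these assumptions, B’s extra opens turn directly into extra replies: the script prints 40 for A and 43 for B per 1,000 emails. If A keeps a clearly higher per-open reply rate in your own data, plug in the rates you observe instead, because the comparison can then go either way.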

9. Does Woodpecker send out emails rotating between versions? Version A first, then B, then A again?

No, Woodpecker sends the two (or more) versions of an email in random order.

First, it randomly (but evenly) selects prospects for version A, then for version B, then for version C, and so on. Each version goes to roughly the same number of contacts, but that doesn’t mean Woodpecker sends version A first, then B, then A again.
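Woodpecker’s exact algorithm isn’t described here. The sketch below only mimics the behavior described above, based on our own assumptions. It shuffles the prospect list, deals it out evenly across the versions, and then shuffles the combined sending queue so that emails don’t go out as A, B, A, B. Both function names are hypothetical.

```python
import random


def assign_versions(prospects, versions, seed=None):
    """Randomly assign prospects to versions so that group sizes differ by at most one."""
    rng = random.Random(seed)
    shuffled = list(prospects)
    rng.shuffle(shuffled)
    groups = {version: [] for version in versions}
    # Deal the shuffled list round-robin: the shuffle makes membership random,
    # and the dealing keeps the groups evenly sized.
    for i, prospect in enumerate(shuffled):
        groups[versions[i % len(versions)]].append(prospect)
    return groups


def send_order(groups, seed=None):
    """Mix all (prospect, version) pairs into one random sending order."""
    rng = random.Random(seed)
    queue = [(prospect, version) for version, members in groups.items() for prospect in members]
    rng.shuffle(queue)
    return queue
```

With 10 prospects and versions A, B and C, the groups end up with 4, 3 and 3 prospects. Meanwhile, `send_order` mixes the versions randomly instead of alternating them.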

Read more about how to perform A/B tests in Woodpecker>>

10. Is a prospect going to receive one version throughout the whole sequence?

No, a prospect isn’t assigned to a single version. They get a random version at each step of the campaign: for example, version B for the opening email, A for the first follow-up, and D for the second one.
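This per-step behavior can be sketched by repeating a random, even split separately for every step of the sequence. The assumptions here are ours: the versions within each step are spread evenly, and the steps are independent of each other. The step names, prospect names and the `assign_per_step` helper are hypothetical.

```python
import random


def assign_per_step(prospects, steps, seed=None):
    """Map each prospect to {step: version}, choosing versions independently per step."""
    rng = random.Random(seed)
    plan = {prospect: {} for prospect in prospects}
    for step, versions in steps.items():
        # Reshuffle for every step, so a prospect's version at one step
        # doesn't determine its version at the next.
        order = list(prospects)
        rng.shuffle(order)
        for i, prospect in enumerate(order):
            # Round-robin dealing keeps each step's version groups evenly sized.
            plan[prospect][step] = versions[i % len(versions)]
    return plan


# Hypothetical campaign: each step can have its own number of versions.
campaign_steps = {
    "opening email": ["A", "B", "C", "D"],
    "follow-up 1": ["A", "B"],
    "follow-up 2": ["A", "B", "C", "D"],
}
plan = assign_per_step(["anna", "ben", "chris"], campaign_steps, seed=7)
for prospect, step_versions in plan.items():
    print(prospect, step_versions)
```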

11. Can I add a version when the campaign is already running?

Yes, you can add a new version at any time.

12. Should I create a new campaign or just add prospects in this case?

If I run a campaign with a couple of email versions, test it for a while, pick the winning version, and then get a new batch of prospects to reach out to, is it better to add them to the campaign that’s already running or to start a new one?

If you don’t want to change any campaign settings or email copy, it will be more convenient for you to add them to a running campaign.

Over to you

I hope that after reading this article, you feel comfortable with A/B testing in email. Log in to your Woodpecker account or sign up for a free trial to test it for yourself.
