Split tests, or A/B tests, are a well-known method for optimizing website and newsletter copy. But at Woodpecker we know that cold email copy also needs constant tweaking if it is to bring the best results. Here’s a little about split testing in cold email: which parts of our email can be tested, how we can test most effectively, and what tools we can use to compare the results conveniently.

A/B testing in a nutshell

I bet everyone knows the idea of A/B testing, but just in case, here’s what it looks like:

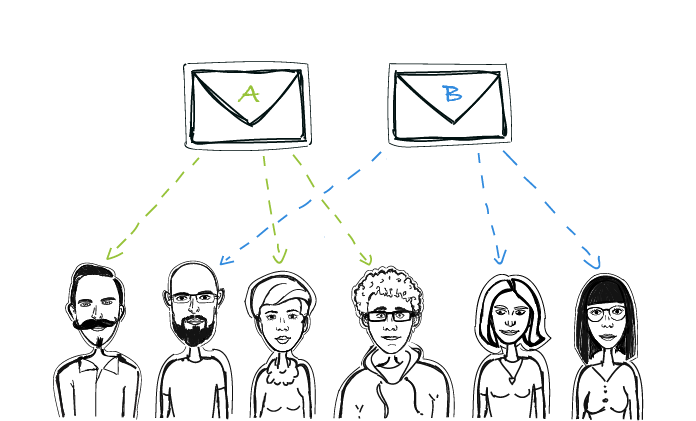

- We start with the copy and graphic elements on our website.

- We choose one element or piece of copy and prepare two alternative versions: A and B.

- We split our group of recipients into halves.

- We show or send the A version to one half of our target group, and the B version to the other half.

- We compare the results and look for significant differences.

- On the basis of the results, we keep the version that worked better.
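The splitting step above can be sketched in a few lines of Python. This is a minimal illustration, not a Woodpecker feature: the `split_in_half` helper is hypothetical, and it assumes recipients are simply a list of email addresses. Shuffling before splitting matters because contact lists are often ordered (alphabetically, or by date added), and cutting an ordered list in the middle could put systematically different people into each half.

```python
import random


def split_in_half(recipients, seed=None):
    """Randomly split recipients into two groups, A and B.

    Hypothetical helper for illustration. If the list has an odd length,
    group B gets the extra recipient.
    """
    shuffled = list(recipients)            # copy, so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # a fixed seed makes the split reproducible
    middle = len(shuffled) // 2
    return shuffled[:middle], shuffled[middle:]


if __name__ == "__main__":
    # Made-up addresses, for illustration only.
    prospects = [f"prospect{i}@example.com" for i in range(200)]
    group_a, group_b = split_in_half(prospects, seed=7)
    print(len(group_a), len(group_b))  # prints: 100 100
```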

What parts of our email can we split test?

Do you recall the 6-step tutorial on writing an effective cold email I published here some time ago? It covers six crucial elements of a cold email, and we can test each of them.

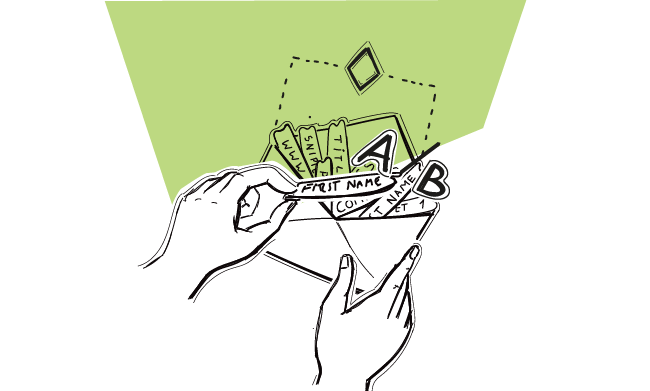

IMPORTANT: remember to test each element separately.

For instance, if we want to check the effectiveness of different subject lines, we change only that part and keep all the other elements intact. Otherwise, we would not be able to tell which change actually affected the recipients, and that would be more guesswork than A/B testing.

How to carry out A/B tests in cold email?

Once we have specified our group of prospects and our contact base is ready, we split the contact base into halves. We should keep the two groups as homogeneous as possible, which means as similar to each other as possible in terms of both size and the kind of recipients they contain.

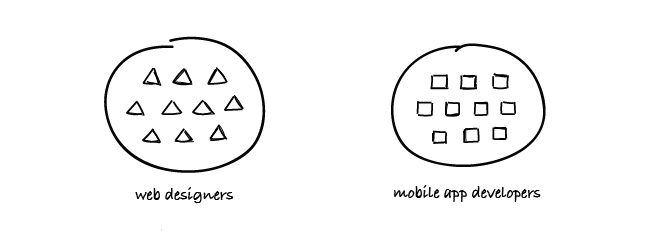

For instance, a group of web designers and a group of mobile app developers are not homogeneous groups.

To perform a split test on two such groups, we need to divide each of them into halves. The two halves of each group will then be homogeneous.

This is crucial because we want to rule out every factor other than the change in our copy that may influence our results, and the type of recipient is one of those factors.
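One way to apply this is to halve each segment separately and then pool the halves, often called a stratified split. The sketch below is a hedged illustration: the `stratified_split` helper and the `segment` field on each contact are assumptions made for this example, not part of any particular tool.

```python
import random
from collections import defaultdict


def stratified_split(contacts, segment_of, seed=None):
    """Split contacts into groups A and B, halving each segment separately.

    `segment_of` is a function returning a contact's segment, e.g. its job
    title. Each segment is shuffled and cut in half, so A and B end up with
    (almost) the same mix of recipient types.
    """
    rng = random.Random(seed)
    by_segment = defaultdict(list)
    for contact in contacts:
        by_segment[segment_of(contact)].append(contact)

    group_a, group_b = [], []
    for segment in sorted(by_segment):  # sorted so the result is reproducible
        members = by_segment[segment]
        rng.shuffle(members)
        middle = len(members) // 2
        group_a.extend(members[:middle])
        group_b.extend(members[middle:])
    return group_a, group_b


if __name__ == "__main__":
    # Hypothetical contact base: 6 web designers and 4 mobile app developers.
    contacts = (
        [{"email": f"designer{i}@example.com", "segment": "web designer"} for i in range(6)]
        + [{"email": f"dev{i}@example.com", "segment": "app developer"} for i in range(4)]
    )
    a, b = stratified_split(contacts, lambda c: c["segment"], seed=1)
    for name, group in (("A", a), ("B", b)):
        print(name, sorted(c["segment"] for c in group))
```

Whatever the seed, each group in this example receives 3 web designers and 2 app developers, so a difference in results cannot be explained by one version reaching more developers than the other.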

EXAMPLE

Let’s say we are a company offering development services that drive web and mobile app acquisition strategies. We want to start working with freelance designers who prepare graphic designs themselves and outsource the coding to developers with the necessary skills and tools (e.g., automation testing tools). Say we have a base of 200 web designers we want to reach out to, and we want to split test our subject line. Here’s what we do:

- We divide our group into two groups of 100.

- We prepare our cold email copy with two versions of the subject line. It’s important to prepare the email as a whole, so that both subject lines go with exactly the same body copy. Say we’ve got:

Subject line Version A: Tired of cutting web designs on your own?

Subject line Version B: Your projects on Behance & a question

- Next, we send the email with Version A of the subject line to the first 100 prospects and the email with Version B to the other 100.

- We compare the open rates and, most importantly, the reply rates. Whichever version brings better results is the subject line to keep.
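With only 100 emails per version, a gap that looks large can still be down to chance, which is why the A/B testing recap talks about looking for significant differences. A common check is a two-proportion z-test, sketched below with the Python standard library only. The reply counts in the usage example are made up for illustration, and the normal approximation used here is rough when counts are very small (Fisher’s exact test is the usual alternative in that case).

```python
import math


def two_proportion_z_test(successes_a, sent_a, successes_b, sent_b):
    """Compare two rates (e.g., reply rates) with a two-sided z-test.

    Returns (rate_a, rate_b, p_value). Uses the pooled-proportion normal
    approximation, which is only rough for very small counts.
    """
    rate_a = successes_a / sent_a
    rate_b = successes_b / sent_b
    pooled = (successes_a + successes_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:  # both rates are 0% or both are 100%: nothing to compare
        return rate_a, rate_b, 1.0
    z = (rate_a - rate_b) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return rate_a, rate_b, p_value


if __name__ == "__main__":
    # Hypothetical results: 12 replies to Version A, 5 replies to Version B.
    rate_a, rate_b, p = two_proportion_z_test(12, 100, 5, 100)
    print(f"A: {rate_a:.0%}, B: {rate_b:.0%}, p = {p:.3f}")
```

With these made-up numbers, p comes out at roughly 0.08, above the conventional 0.05 threshold, so a 12% vs 5% reply rate on 100 emails each would not yet count as a clear winner; sending each version to more prospects would make the comparison more reliable.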

What if the results are still not as good as we want?

We have two ways out of such a situation:

a) We keep testing the same element, e.g., the subject line.

b) We switch to split testing another part of our email.

It’s difficult to say which part of our email has the most significant influence on our addressees. Usually, it’s a combination of several elements. That’s why A/B testing is so important.

In my example, I tested the subject line. But each of the six elements of a cold email may influence the effectiveness of our outreach. At 52Challenges, our previous project, we observed that both the From line and the email address we sent our emails from affected the reply rates.

Emails sent from one address with the From line “Matt from 52Challenges” brought fewer replies than those sent from another address as Cathy Patalas. It’s not a universal rule, though. It all depends on our specific group of addressees.

How to compare the results quickly?

If you send your emails manually, comparing open rates is hardly possible. Comparing reply rates is possible, but it requires laboriously tracking and counting the replies.
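If you do keep your own records, for example in a spreadsheet export, the counting can at least be automated. The sketch below assumes a hypothetical log with one record per sent email, noting its variant and whether it was opened and replied to; the `SentEmail` type and its fields are illustrative only. For manually sent emails you would typically only have reliable reply data, so treat the open-rate column as available only when a tracking tool supplies it.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class SentEmail:
    """One sent email in a hypothetical tracking log."""
    recipient: str
    variant: str    # "A" or "B"
    opened: bool
    replied: bool


def rates_by_variant(log):
    """Return {variant: {"sent", "open_rate", "reply_rate"}} for the log."""
    sent, opened, replied = Counter(), Counter(), Counter()
    for email in log:
        sent[email.variant] += 1
        opened[email.variant] += email.opened    # True counts as 1, False as 0
        replied[email.variant] += email.replied
    return {
        variant: {
            "sent": sent[variant],
            "open_rate": opened[variant] / sent[variant],
            "reply_rate": replied[variant] / sent[variant],
        }
        for variant in sorted(sent)
    }


if __name__ == "__main__":
    # Made-up log entries, for illustration only.
    log = [
        SentEmail("ann@example.com", "A", opened=True, replied=True),
        SentEmail("bob@example.com", "A", opened=True, replied=False),
        SentEmail("cy@example.com", "B", opened=False, replied=False),
        SentEmail("di@example.com", "B", opened=True, replied=False),
    ]
    for variant, stats in rates_by_variant(log).items():
        print(variant, stats)
```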

We found a way around that in Woodpecker. A separate post offers a step-by-step tutorial on how to do it with our tool.

What’s in it for you?

I can imagine you’re now counting the hours you’d spend improving your email copy one element after another. You probably think you’d rather throw an email together, hit send, and just see what happens.

Well, that’s always a start (just make sure your email is good, anyway). But if the first version doesn’t work the way you’d want it to, A/B testing is a good way to find out which part of your message does or does not do the job you planned for it.

So, as I always say: don’t be lazy! The work you put into the process will pay off in the long run, as you see more and more prospects reply to your cold emails and get interested in your offer.
